| Drift(s) | TY Precision | TY Recall | TY F-Score | CSP Precision | CSP Recall | CSP F-Score | RN Precision | RN Recall | RN F-Score |
|---|---|---|---|---|---|---|---|---|---|
| Adrift-027 | 0.80 | 0.55 | 0.65 | 0.58 | 0.80 | 0.67 | 0.45 | 0.72 | 0.55 |
| Adrift-053-063 | 0.73 | 0.69 | 0.71 | 0.63 | 0.82 | 0.72 | 0.48 | 0.79 | 0.60 |

Fin Whale AI

Deep Learning to Detect and Classify Fin Whale Calls

OSA was tasked with processing low-frequency drifting recorder data to identify occurrences of 40 Hz fin whale calls and of sei whale calls. A related analytical objective was to develop an improved method for detecting low-frequency fin whale calls in noisy acoustic environments using a deep learning approach. Using DeepAcoustics, a deep learning network development and detection tool, we iteratively tested image and network parameters on the calls identified during the data review process. Network development included training on both 20 Hz and 40 Hz call types and resulted in successful detection despite excessive instrument noise in the dataset.

Network performance was evaluated in two ways: by comparing detections against an annotated test file, and by comparing detections against our semi-automated PAMGuard processing approach, in which a human in the loop classifies calls and assigns them to an acoustic encounter. After identifying 40 Hz and 20 Hz calls with the PAMGuard approach, we annotated approximately 1,400 calls in Raven for use in network training. Annotations of 20 Hz calls from another dataset were added to increase the sample size.

Three network architectures were evaluated: tiny YOLO (You Only Look Once), CSP-DarkNet-53, and ResNet-50. We tested performance using a separate set of annotated calls, and we also assessed performance on recordings without vocalizations that contained varying degrees of instrument noise. Extensive instrument noise and the small sample size affect the performance metrics; nevertheless, we consider these results favorable given the degree of noise (see table).
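The relationship between the precision, recall, and F-score columns in the table can be illustrated with a short sketch. It assumes the reported F-score is the balanced F1 score (the harmonic mean of precision and recall); the report does not state the formula, although the Adrift-027 row is consistent with it. The function name `f_score` is illustrative and is not part of DeepAcoustics.

```python
def f_score(precision: float, recall: float) -> float:
    """Balanced F1 score: harmonic mean of precision and recall.

    Assumption: the F-score in the table is F1; the report does not say so.
    """
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the Adrift-027 row of the table.
adrift_027 = {
    "tiny YOLO": (0.80, 0.55),
    "CSP-DarkNet-53": (0.58, 0.80),
    "ResNet-50": (0.45, 0.72),
}

for network, (p, r) in adrift_027.items():
    print(f"{network}: F1 = {f_score(p, r):.2f}")
```

Because the harmonic mean is pulled toward the smaller of the two inputs, a network with high recall but low precision (many false positives) does not score well on recall alone.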

When false positive rates are incorporated into the evaluation, tiny YOLO and CSP-DarkNet-53 demonstrate the additional benefit of deep network development (see the OSA Report in the GitHub repository).

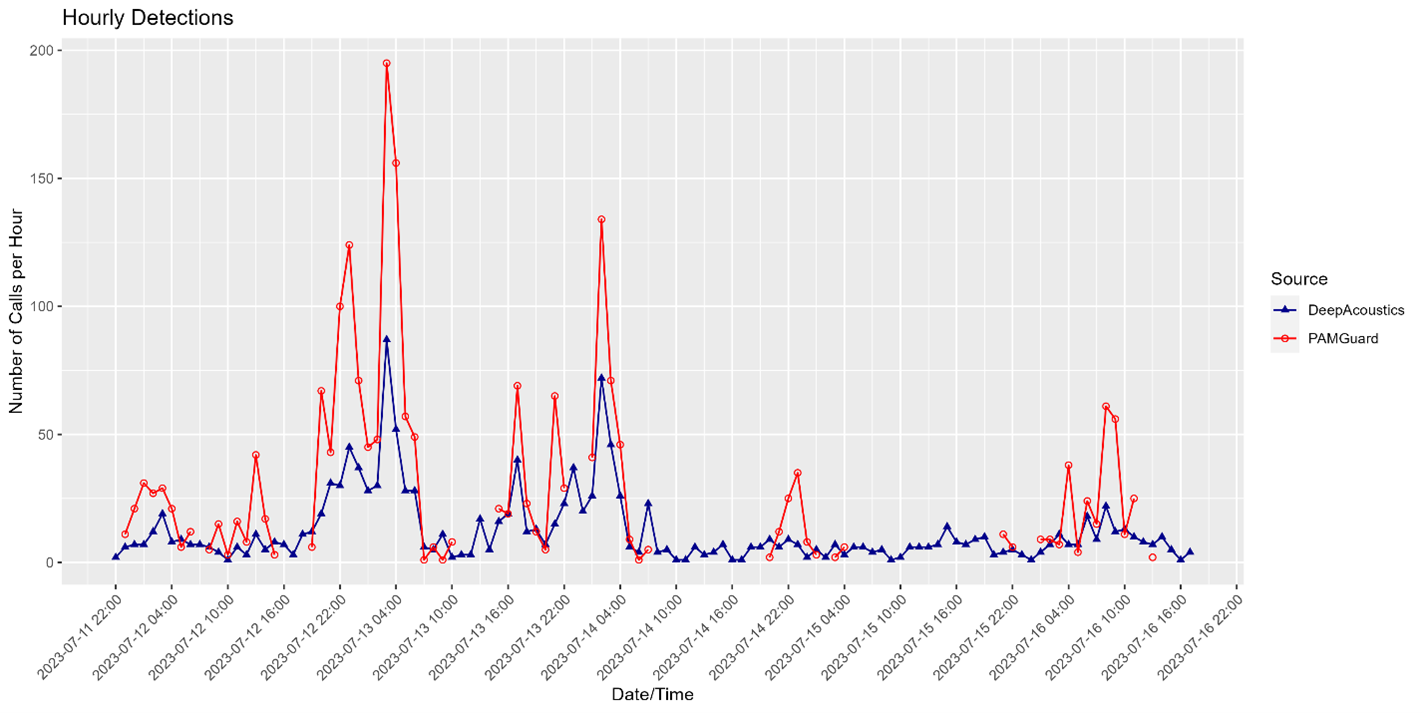

The next step was to evaluate the performance of the network on a larger dataset, as the aim of network development is to derive a model that can process a dataset both quickly and accurately. The Adrift-083 dataset was selected because it contained fin whale 40 Hz calls and blue whale D calls. In the figure below, both types of calls are included in the PAMGuard annotations, and blue whale D calls are known to result in false positives for this version of the network. Humpback whale social calls were also present but were not annotated in our review (thus not represented in these figures). Over the seven-day period of this drift, the detection pattern by the PAMGuard method (approximately six hours to process) was matched by the detection pattern of DeepAcoustics (approximately 30 minutes to process). A low false positive rate during periods without calls was consistent across the drift (Figure 1).
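One simple way to compare detection patterns from two methods over a drift is to bin detection times by hour and check how often the methods agree on call presence. The sketch below is a hypothetical illustration and not the procedure used in this analysis: the function names, the one-hour bin width, and the toy timestamps are all assumptions made for the example.

```python
from collections import Counter
from datetime import datetime, timedelta


def hourly_counts(detections, start, n_hours):
    """Count detections in each one-hour bin, beginning at `start`."""
    counts = Counter()
    for t in detections:
        idx = int((t - start).total_seconds() // 3600)
        if 0 <= idx < n_hours:  # ignore detections outside the window
            counts[idx] += 1
    return [counts[i] for i in range(n_hours)]


def presence_agreement(counts_a, counts_b):
    """Fraction of bins in which both methods agree on presence or absence."""
    agree = sum((a > 0) == (b > 0) for a, b in zip(counts_a, counts_b))
    return agree / len(counts_a)


# Toy timestamps, made up for illustration only (not data from the drift).
start = datetime(2023, 1, 1, 0, 0)
method_a = [start + timedelta(minutes=m) for m in (10, 70, 200)]
method_b = [start + timedelta(minutes=m) for m in (12, 75, 130)]

counts_a = hourly_counts(method_a, start, n_hours=4)
counts_b = hourly_counts(method_b, start, n_hours=4)
agreement = presence_agreement(counts_a, counts_b)
```

Hourly presence is a coarse measure. It tolerates small timing offsets between the two methods but says nothing about call-level matches, which require the annotated test file described earlier.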

Deep learning is indispensable for managing the immense volumes of acoustic data, facilitating efficient processing and precise analysis of extensive datasets. This capability is critical for fulfilling the monitoring requirements of organizations such as NOAA and BOEM, ensuring timely and thorough assessment of marine environments and protected species.

Future research should consider further improving network performance by adding multi-class training for blue whale D calls and by expanding the training dataset. It should also consider developing networks tailored to challenging calls like these, as well as to additional species, and making those networks publicly available. OSA is also collaborating with the PAMGuard software developers to enable the integration of DeepAcoustics models into their detection platform. Future funding should consider processing archival data in the BOEM repository using the developed networks.